A.I. in QA: More Than Just a Bot

Adam Creamer

Mobile applications don’t live out in the world on their own. They depend on devices to host them and to deliver the value and overall mobile experience the application (hopefully) provides. Therefore, before an app reaches users, it’s important to test it on mobile devices, which can be done in one of two ways: the “virtualized” approach, using an emulator or simulator, or the real device approach, where the app is physically installed and tested on a real device.

But these two approaches are not created equal. We won’t go into all the differences between using an emulator/simulator and using a real device, but I will sum up the argument for why real device testing is the way to go:

If you test only on emulators and simulators, you’ll likely release your application to potential paying customers with many functional, performance, and visual issues. Only real devices offer comprehensive coverage for all three, because only real devices reproduce real-world conditions. After all, your customers and users don’t use an emulator to request an Uber, video chat with their doctor, or access their bank account.

The key takeaway? If you care about providing a high-quality mobile experience, you’re going to need real devices.

If you are going to test on real devices, you’re going to have to procure them, manage them, and make them accessible to your entire team. At least, that is how organizations started the real device journey. Every time a new device was introduced to the market, or a new OS was released, somebody within the organization had to purchase the phone (possibly several units, so that multiple people could test or multiple OS versions could be tested on the same model) and continuously upgrade and downgrade OS versions for thorough testing. If this sounds like a major headache and cost burden, it is. With thousands of device and OS combinations, hosting all of the devices yourself and making them accessible to your entire team simply became unsustainable.

The market has caught on to the fact that it’s unrealistic to own and physically provide every mobile device needed for proper testing and development. As a result, leading enterprises are turning to Mobile Device Clouds. Instead of constantly procuring and managing devices in-house, teams let these platforms handle the hard work. The best of them even offer device procurement and multiple options for cloud access. For example, here at Kobiton, we’ll buy the devices for you and host them in our data center, or you can “cloud-ify” your own locally hosted real devices. If you want to test on a device you don’t have in-house, you can rent one of the hundreds of devices in our public cloud on a per-minute basis. And, of course, we also offer on-premises solutions, but that’s a topic for another article.

This move to the Mobile Device Cloud has been a major value driver for the enterprise, and a necessity during the ongoing pandemic, because it made highly responsive real devices available to any member of your team, wherever they are. It also eliminated the need for employees to stay in the office to host and manage local devices, since the Mobile Device Cloud provider can manage them instead.

However, every good new thing often comes with an initial trade-off. In the case of Mobile Device Clouds, that trade-off is time spent waiting and time wasted. Let’s dive a bit deeper into each.

Wait time is the time that elapses between a tester performing an action on a device via the browser and the browser actually rendering the device’s response in its new state. While we are increasingly accustomed to minimal wait times in everyday life (e.g., something like 40% of mobile shoppers will abandon a shopping cart if the page takes longer than 3 seconds to load), wait time remains a problem in the life of the mobile tester. Eventually, it makes its way to the business side of the house, leading to less time available to test, worse coverage as a result, and slower delivery.

To help unpack this even more, let’s look at a hypothetical. Let’s assume:

- 10 testers on our team
- 20 days of testing in a month
- 6 hours a day spent on testing, per tester
- Our testers can execute 7 test steps a minute

Now, let’s do some math:

10 testers x 20 days in a month x 6 hours a day x 60 minutes in an hour x 7 test steps per minute = 504,000 test steps a month

To calculate the cost to the testing team, assume a wait time of 500ms per test step (average for the higher-performing device clouds today). That equates to 70 hours per month spent just waiting across the team, or 7 hours per tester.

To calculate the cost to the overall business, assume that each of your ten testers is paid $40 an hour. On top of what you pay for the actual testing, each month you’re also paying an extra $280 per tester (7 hours x $40) for them to wait for a response in their browser. That’s $2,800 a month, $8,400 per quarter, and $33,600 per year.
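If you want to rerun this math with your own team’s numbers, the calculation is easy to script. The following is a minimal Python sketch, not a Kobiton tool: `TeamProfile` and the function names are hypothetical, and it assumes, as the example does, a constant average wait per test step and a flat hourly rate.

```python
"""Back-of-the-envelope Wait Time cost, using the hypothetical team figures."""

from dataclasses import dataclass


@dataclass
class TeamProfile:
    testers: int             # number of manual testers
    days_per_month: int      # testing days in a month
    hours_per_day: float     # hours of testing per tester per day
    steps_per_minute: float  # test steps each tester executes per minute
    wait_ms_per_step: float  # assumed constant average wait per test step, in ms
    hourly_rate: float       # cost per tester-hour, in dollars


def monthly_steps(p: TeamProfile) -> float:
    """Total test steps executed by the whole team in one month."""
    return p.testers * p.days_per_month * p.hours_per_day * 60 * p.steps_per_minute


def monthly_wait_hours(p: TeamProfile) -> float:
    """Team-wide hours per month spent waiting: steps x ms per step, converted to hours."""
    return monthly_steps(p) * p.wait_ms_per_step / 1000 / 3600


def wait_cost(p: TeamProfile) -> dict:
    """Dollar cost of that waiting per tester-month, team-month, quarter, and year."""
    team_month = monthly_wait_hours(p) * p.hourly_rate
    return {
        "per_tester_month": team_month / p.testers,
        "team_month": team_month,
        "team_quarter": team_month * 3,
        "team_year": team_month * 12,
    }


if __name__ == "__main__":
    # Hypothetical figures from the example: 10 testers, 20 days, 6 h/day,
    # 7 steps/min, 500 ms wait per step, $40/h.
    example = TeamProfile(testers=10, days_per_month=20, hours_per_day=6,
                          steps_per_minute=7, wait_ms_per_step=500, hourly_rate=40)
    print(f"{monthly_steps(example):,.0f} test steps per month")
    print(f"{monthly_wait_hours(example):.0f} team-hours spent waiting per month")
    for period, dollars in wait_cost(example).items():
        print(f"{period}: ${dollars:,.0f}")
```

With the example figures, it reports 504,000 test steps and 70 team-hours of waiting per month, which comes to $280 per tester per month, $2,800 per team-month, $8,400 per quarter, and $33,600 per year. Swapping in your own wait time per step is the quickest way to see what a faster device cloud would be worth to your team.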

That $33,600 per year isn’t the only cost to consider when choosing a Mobile Device Cloud provider. Wasted time matters just as much, if not more, to the success of your mobile testing and development teams and of your mobile channels.

By wasted time, I mean the time spent on tests in which a manual tester ends up interacting with the wrong screen element because of rendering lag from the device screen and/or browser. The best way to quantify this phenomenon is by measuring input lag.

Input lag is the measurable delay between inputting a command and the action occurring on-screen.[1] It is often confused with response time, but there are key differences. For example, game manufacturers care about input lag because it shows up as the time between pressing a button on the controller and the command happening on screen. Response time, on the other hand, matters a great deal when building and managing a retail site, because it governs the time between a user clicking “Add to cart” and the next screen rendering with that item in their cart.

When it comes to building the perfect Mobile Device Cloud, we see both input lag and response time as crucial to optimize, but it’s input lag that actually causes mistakes during manual testing, which often leads to incomplete coverage and bugs leaking into the hands of customers and users.

Let’s take a moment to quantify the impact of input lag and explain why we at Kobiton have made it a major priority. Anybody who has performed a manual test on a real mobile device in the cloud has likely experienced input lag at least once (and more likely hundreds of times). It’s much worse on some platforms than others, and it’s probably the number one reason customers ask us about our device performance, even though they come to us to test performance in the first place.

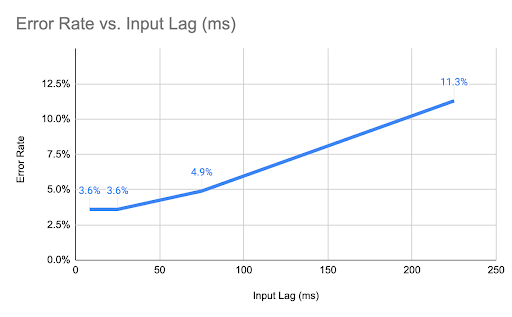

If you are one of the rare testers who feel they haven’t experienced input lag and its impact on testing, here is a common real-world example: you scroll in a list view and there is no immediate response, or the response is only partial. So you scroll again, or you tap one of the list items before the screen has finished scrolling. In the latter case, your physical action selects the wrong item on the screen. This is where test mistakes occur. The evidence isn’t just anecdotal: a study published at Interchi found that error rates roughly triple, from a baseline of 3.6% when lag is under 25ms to 11.3% when lag is 225ms.[2]

The impact of testing errors really can’t be overstated. Even if your team catches an error in time, your testers will have to go back and re-run their tests, or development may even have to undo a change made in response to the faulty test and its false result. This is the kind of thing that keeps releases behind, and when a 225ms lag leads to errors 11.3% of the time, that adds up to a lot of wasted time. As bad as that is, it’s not the only consequence of such an error rate. If your team doesn’t catch the issue in time, you may end up releasing an app with a critical flaw that a test gone awry missed. Neither outcome is ideal.

As in every area of our lives, we start to recognize patterns in input lag. We begin to expect it unconsciously, and we compensate. The problem is that our compensation is limited by our own natural response times.

A study conducted at Clemson found that the average human response time to a visual stimulus is about 200ms for young adults. When the stimulus is visually fluid (think negligible input lag), like a scrolling list on a mobile device, our mind starts to predict where the movement is going, which lets us react faster than we can to a non-fluid stimulus. A fluid source poses no problem: by combining reaction time with proprioception, humans can respond within 100ms, which is consistent with the Interchi 1993 study.

However, input lag results in non-fluidity. When lag unpredictably oscillates between acceptable values around 200ms and high values of 800ms, the mind compensates for that, too. But instead of predicting where the object is moving, it learns that it cannot rely on the input and naturally compensates by waiting a little longer after each input to make sure the screen is no longer in motion. For example, when I test a mobile application and the lag unpredictably varies between 200ms and 800ms, I naturally start waiting until the 800ms mark to make sure there is no further rendering delay. Over time, this reduces the Wasted Time cost but increases the Wait Time cost. If I act against my natural inclination to wait, I’ll make errors, increasing Wasted Time in exchange for less Wait Time. Either way, I end up wasting valuable time and resources.
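The trade-off in that example can be made concrete with a toy model. This Python sketch is an illustration under stated assumptions, not a measurement method: the function `wait_vs_risk` is hypothetical, the lag samples are made up to span the 200ms to 800ms range mentioned above, and it assumes the tester acts a fixed time after every input and that acting before the screen has settled risks tapping the wrong element.

```python
"""Toy model of the Wait Time vs. Wasted Time trade-off under variable input lag."""

from typing import Sequence, Tuple


def wait_vs_risk(lags_ms: Sequence[float], act_after_ms: float) -> Tuple[float, float]:
    """Return (average idle ms per step, share of steps acted on too early).

    Assumption: the tester always acts exactly `act_after_ms` after each input.
    Idle time is how long they keep waiting after the screen has already settled;
    a step counts as "too early" when its lag exceeds the chosen wait.
    """
    if not lags_ms:
        raise ValueError("need at least one lag sample")
    idle = sum(max(0.0, act_after_ms - lag) for lag in lags_ms)
    early = sum(1 for lag in lags_ms if lag > act_after_ms)
    return idle / len(lags_ms), early / len(lags_ms)


if __name__ == "__main__":
    # Hypothetical lag samples oscillating between 200ms and 800ms.
    samples = [200, 350, 500, 650, 800]
    for threshold in (200, 500, 800):
        idle, early = wait_vs_risk(samples, threshold)
        print(f"act after {threshold}ms: {idle:.0f}ms idle per step, "
              f"{early:.0%} of steps acted on too early")
```

With these samples, moving the threshold from 200ms to 800ms drops the share of steps acted on too early from 80% to 0%, but raises idle time from 0ms to 300ms per step: less risk of Wasted Time, paid for with more Wait Time.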

In addition to the very tangible Wait Time and Wasted Time costs, organizations also incur intangible costs in employee morale and customer satisfaction. As in every industry, the best testers gravitate toward organizations that give them the best tools to do their jobs effectively. People want to work for companies that invest in their overall experience at work, and until the craft beer stops flowing in young tech start-up offices, that’s not going to change.

We won’t buy your office a kegerator, but we did just build the next-generation Mobile Device Cloud. While we have had the highest-performing Mobile Device Cloud and input lag testing for some time now, we never wanted to stop at “best.” We aim for “even better,” and we have always envisioned device response, session data, system metrics, and video all streamed in real time, with virtually no input lag.

That vision just became reality with Kobiton rapidRoute.

When working with Mobile Device Cloud platforms, some portion of the communication traverses the public internet. When the device is close to the browser, the distance traveled across the public internet does not cause significant latency (and therefore input lag). However, when that distance is long, as when someone in India performs a manual test against a device located in New York, network latency plays a greater role in lag time. Our brand-new rapidRoute product minimizes the portion of traffic between the browser and the device that traverses the public internet, regardless of where each is located.
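To see why distance matters, here is a back-of-the-envelope Python sketch of the physical floor on round-trip time. It assumes a signal speed in optical fiber of roughly 200,000 km/s and an approximate 12,000 km straight-line distance between India and New York; the function `min_round_trip_ms` and its `path_stretch` parameter are illustrative only and are not part of any Kobiton API.

```python
"""Lower-bound network round-trip time from distance alone (propagation delay only)."""

# Assumption: light in optical fiber travels at roughly 200,000 km/s,
# about two-thirds of its speed in a vacuum.
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float, path_stretch: float = 1.0) -> float:
    """Round-trip propagation delay in ms over `distance_km` of fiber.

    `path_stretch` scales the straight-line distance to model real routes,
    which are longer than the great-circle path. Queuing, processing,
    video encoding, and rendering delays are all ignored, so real lag is higher.
    """
    one_way_s = distance_km * path_stretch / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    # Hypothetical distances: a tester a few hundred km from the device,
    # and roughly 12,000 km (about India to New York).
    for km in (300, 12_000):
        print(f"{km:>6} km: at least {min_round_trip_ms(km):.0f} ms round trip")
```

Even before any encoding, routing, or rendering overhead, a 12,000 km round trip spends roughly 120ms in transit, versus about 3ms for 300km. This is only a floor; real public-internet paths add detours and queuing on top of it, and that extra, variable portion is what routing choices can influence.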

This gives your testing teams a true point-of-presence connection instead of a single central hub. All of this vastly improves performance and virtually eliminates input lag, and its variability, between testers’ actions and the device response. With Kobiton rapidRoute, both Wait Time and Wasted Time are a thing of the past.

rapidRoute will allow for 30 frames per second (on iOS and Android) with a 200ms service level objective, even when the device and browser are on opposite sides of the globe. You’ll test so fast that even development might struggle to keep up. The benefits of minimizing input lag are nearly endless!